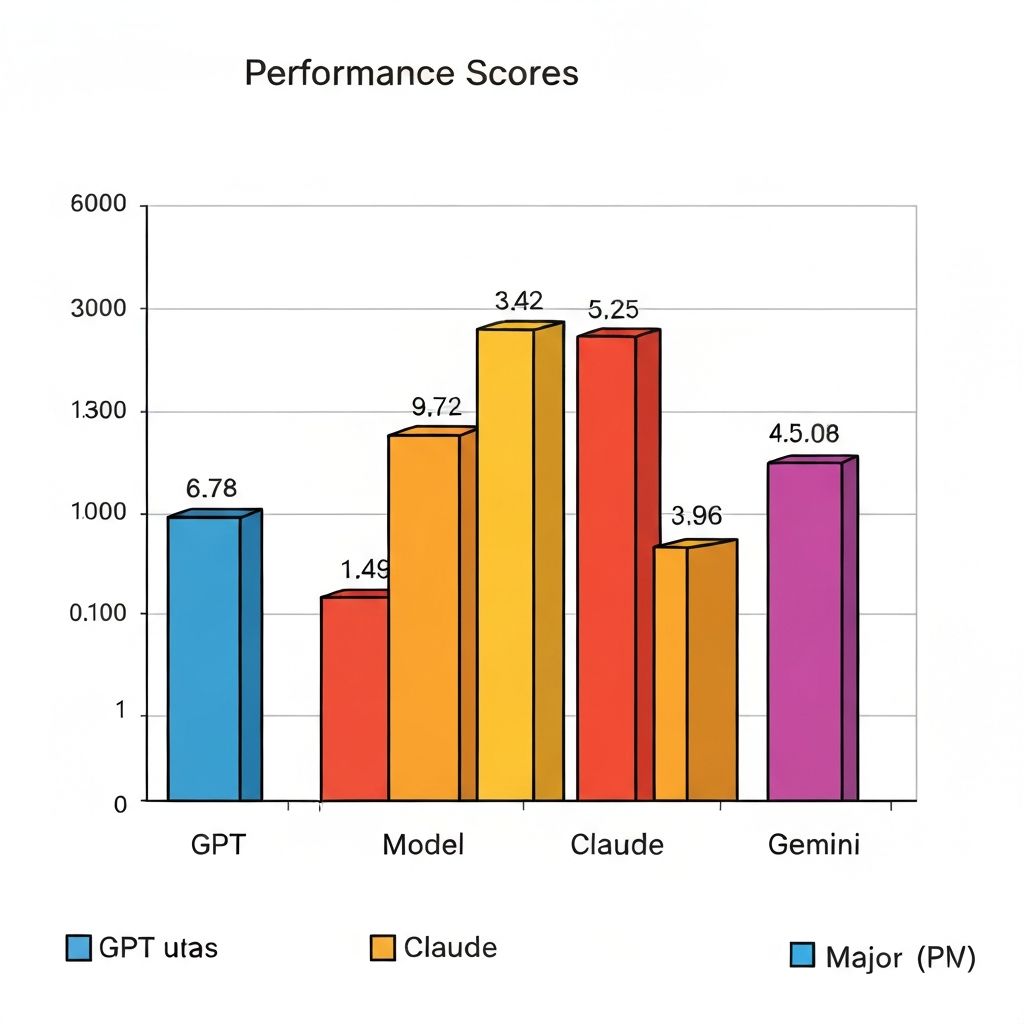

Benchmark Evals for Safety & Risk Use Cases

The most comprehensive evaluation benchmark for assessing generative AI performance across real-world safety and risk use cases. Know which model performs best for your work tasks.